🚀 Try the live Streamlit demo → https://huggingface.co/spaces/AtlaAI/StartupScan to see this Agno workflow in action, with live monitoring on Atla.

Multi-agent workflows often fail silently in production, and teams waste precious days debugging complex agent traces. This example shows how Agno orchestrates a robust market validation tool across multiple agents, and how Atla then automatically detects failures, identifies patterns, and generates and implements improvements.

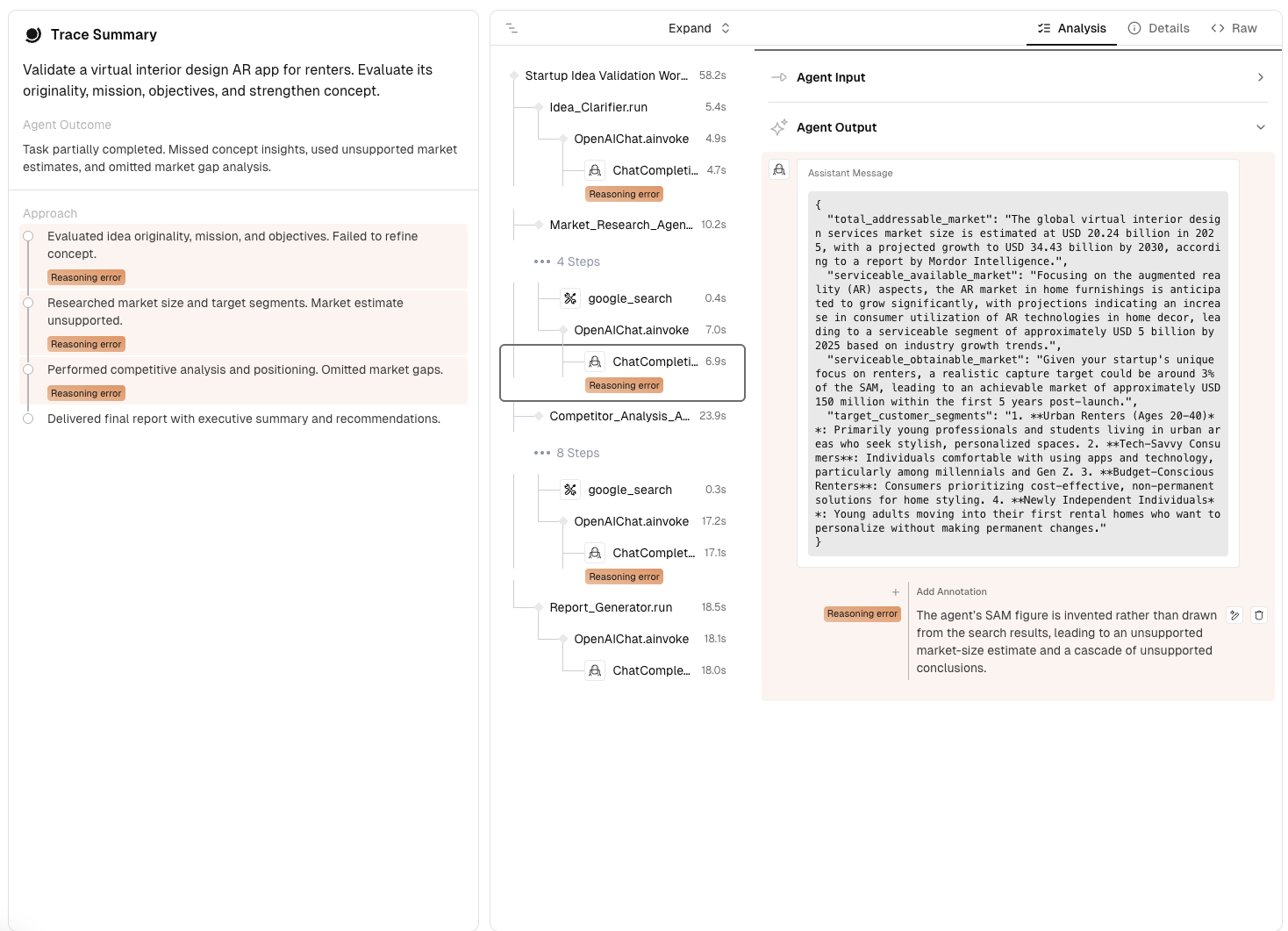

Validating a startup idea typically requires research across multiple domains—market analysis, competitive landscape assessment, and strategic positioning. This example automates the entire process using four specialized agents:

- Idea Clarifier Agent: Refines and evaluates concept originality

- Market Research Agent: Analyzes TAM/SAM/SOM and customer segments

- Competitor Analysis Agent: Performs SWOT analysis and positioning assessment

- Report Generator Agent: Synthesizes findings into actionable recommendations

Why Agno for Multi-Agent Workflow Orchestration

Most frameworks look great in demos but fail under production complexity such as concurrent users, long-running sessions, and multi-agent coordination. Agno is purpose-built for these challenges with production performance (~3μs agent instantiation, <1% framework overhead), pure Python workflows (no proprietary DSL to learn—AI code editors can write workflows), and enterprise-ready architecture (multi-user sessions, built-in state management, native FastAPI integration).

In this startup validation workflow, Agno orchestrates 4 specialized agents across 6-8 web searches with 99%+ reliability, something that would require months of custom infrastructure with alternatives.

Declarative Workflow Definition

Agno makes coordinating multiple specialized agents straightforward. The workflow definition is clean and declarative:

And each agent is configured separately with specific tools, capabilities, and instructions:
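
As a rough illustration of this pattern (a declarative list of steps, each backed by a separately configured agent), here is a minimal, framework-agnostic sketch. `AgentSpec`, `Workflow`, and `stub` are hypothetical stand-ins written for this example; they are not Agno classes, and the real Agno API may look different.

```python
from dataclasses import dataclass, field
from typing import Callable


# Hypothetical stand-ins for illustration only; these are NOT Agno classes.
@dataclass
class AgentSpec:
    name: str
    instructions: str
    # Placeholder for the model call: maps the accumulated context to this agent's output.
    run: Callable[[dict], str]
    tools: list[str] = field(default_factory=list)


@dataclass
class Workflow:
    name: str
    steps: list[AgentSpec]

    def execute(self, idea: str) -> dict:
        """Run each step in order, making earlier results available to later agents."""
        context = {"idea": idea}
        for agent in self.steps:
            context[agent.name] = agent.run(context)
        return context


def stub(label: str) -> Callable[[dict], str]:
    # Stand-in for an LLM call; a real agent would query a model and its tools here.
    return lambda ctx: f"{label} for: {ctx['idea']}"


# The workflow reads as a declaration: which agents run, in which order.
workflow = Workflow(
    name="startup_validation",
    steps=[
        AgentSpec("idea_clarifier", "Refine the concept and evaluate originality.",
                  stub("clarified idea")),
        AgentSpec("market_research", "Estimate TAM/SAM/SOM and customer segments.",
                  stub("market research"), tools=["web_search"]),
        AgentSpec("competitor_analysis", "Perform SWOT analysis and assess positioning.",
                  stub("competitor analysis"), tools=["web_search"]),
        AgentSpec("report_generator", "Synthesize findings into recommendations.",
                  stub("report")),
    ],
)

result = workflow.execute("AI-powered meal planning")
print(list(result))
```

Keeping the step list separate from each agent's configuration means an agent's instructions or tools can change without touching the orchestration logic.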

Guaranteed Structured Outputs (eliminates #1 cause of workflow failures)

One of Agno's most useful features is its Pydantic integration for structured responses. This eliminates the typical pain of parsing unstructured LLM outputs:
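
To show what a structured response buys you, here is a minimal sketch using Pydantic v2 on its own. The `MarketResearch` schema and its field names are assumptions made for this example, not the demo's actual model, and the way a schema is attached to an Agno agent depends on the Agno version, so that part is left out.

```python
from pydantic import BaseModel, Field, ValidationError


class MarketResearch(BaseModel):
    # Hypothetical schema; field names are illustrative, not the demo's actual model.
    tam_usd: float = Field(gt=0, description="Total addressable market")
    sam_usd: float = Field(gt=0, description="Serviceable available market")
    som_usd: float = Field(gt=0, description="Serviceable obtainable market")
    customer_segments: list[str] = Field(min_length=1)


# A well-formed model response parses straight into typed attributes.
raw = '{"tam_usd": 5e9, "sam_usd": 8e8, "som_usd": 4e7, "customer_segments": ["busy professionals"]}'
research = MarketResearch.model_validate_json(raw)
print(research.som_usd, research.customer_segments)

# A malformed response is rejected up front instead of failing later in the workflow.
bad = '{"tam_usd": "large", "customer_segments": []}'
try:
    MarketResearch.model_validate_json(bad)
    rejected = False
except ValidationError as exc:
    rejected = True
    error_fields = sorted({err["loc"][0] for err in exc.errors()})
    print("invalid fields:", error_fields)
```

Downstream agents can then rely on attributes such as `research.som_usd` rather than searching free text for numbers.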

Real-Time Progress Tracking

Agno supports progress callbacks out of the box:
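
The general shape of a progress callback can be sketched in plain Python. `run_steps` and `record` below are hypothetical names for this example and do not reflect Agno's actual callback signature; the point is only that the workflow reports each completed step to a function supplied by the caller.

```python
from typing import Callable, Optional

# Signature assumed for this sketch: (step name, 1-based index, total steps).
ProgressCallback = Callable[[str, int, int], None]


def run_steps(steps: list[str], on_progress: Optional[ProgressCallback] = None) -> list[str]:
    """Run steps in order and notify the caller after each one completes."""
    completed = []
    total = len(steps)
    for index, step in enumerate(steps, start=1):
        # A real workflow would invoke the agent for this step here.
        completed.append(step)
        if on_progress is not None:
            on_progress(step, index, total)
    return completed


events: list[str] = []


def record(step: str, index: int, total: int) -> None:
    # Collect progress messages; a UI would render them instead.
    events.append(f"[{index}/{total}] {step} done")


run_steps(
    ["Idea Clarifier", "Market Research", "Competitor Analysis", "Report Generator"],
    on_progress=record,
)
print("\n".join(events))
```

In a Streamlit front end, the callback could update a progress bar or status message instead of appending to a list.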

Adding Agent Evaluation + Optimization with Atla

While Agno handles workflow orchestration, Atla provides the monitoring + improvement layer needed for production deployments.

Simple Integration Setup

Adding comprehensive monitoring requires just a few lines:

Atla Workflow

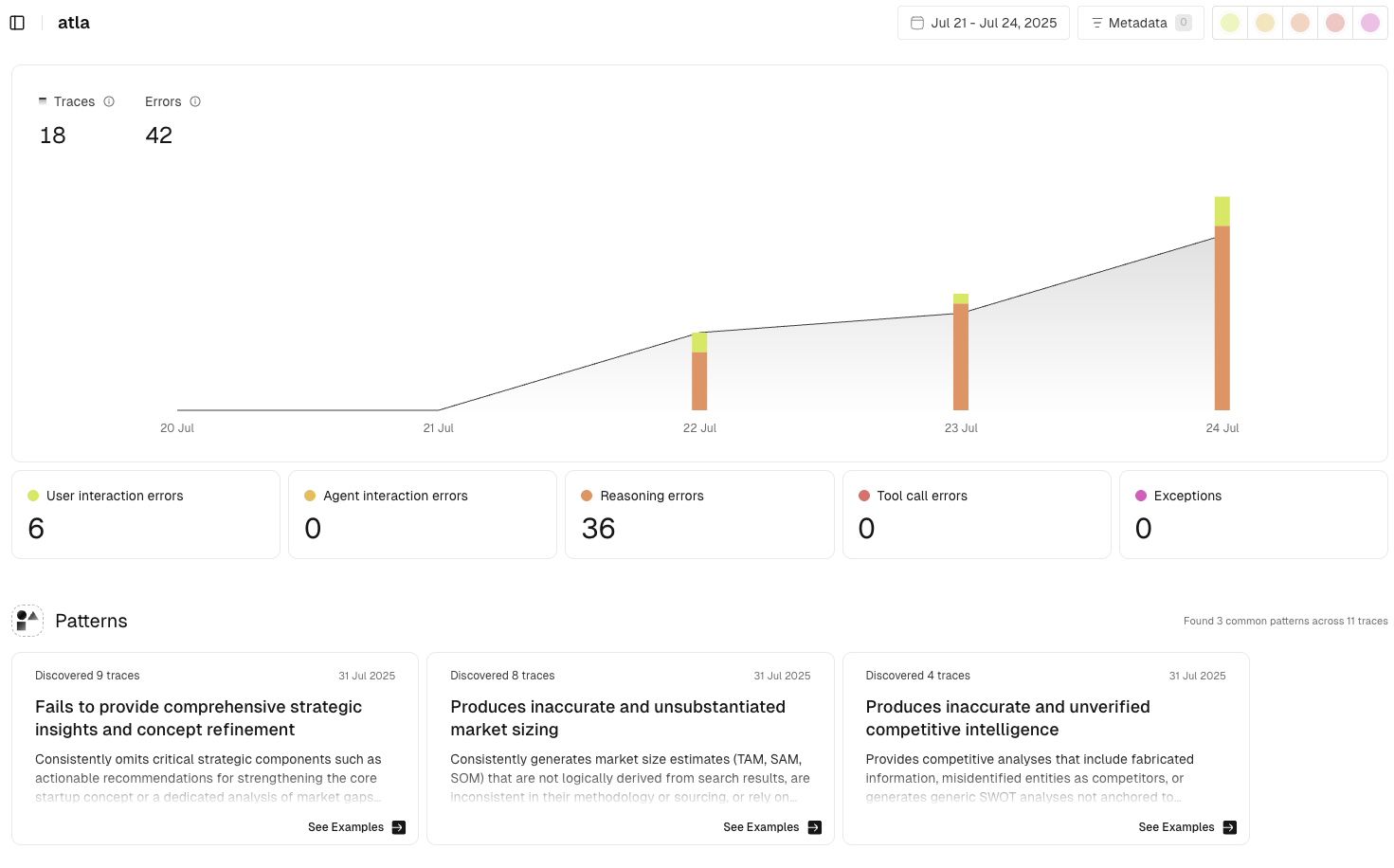

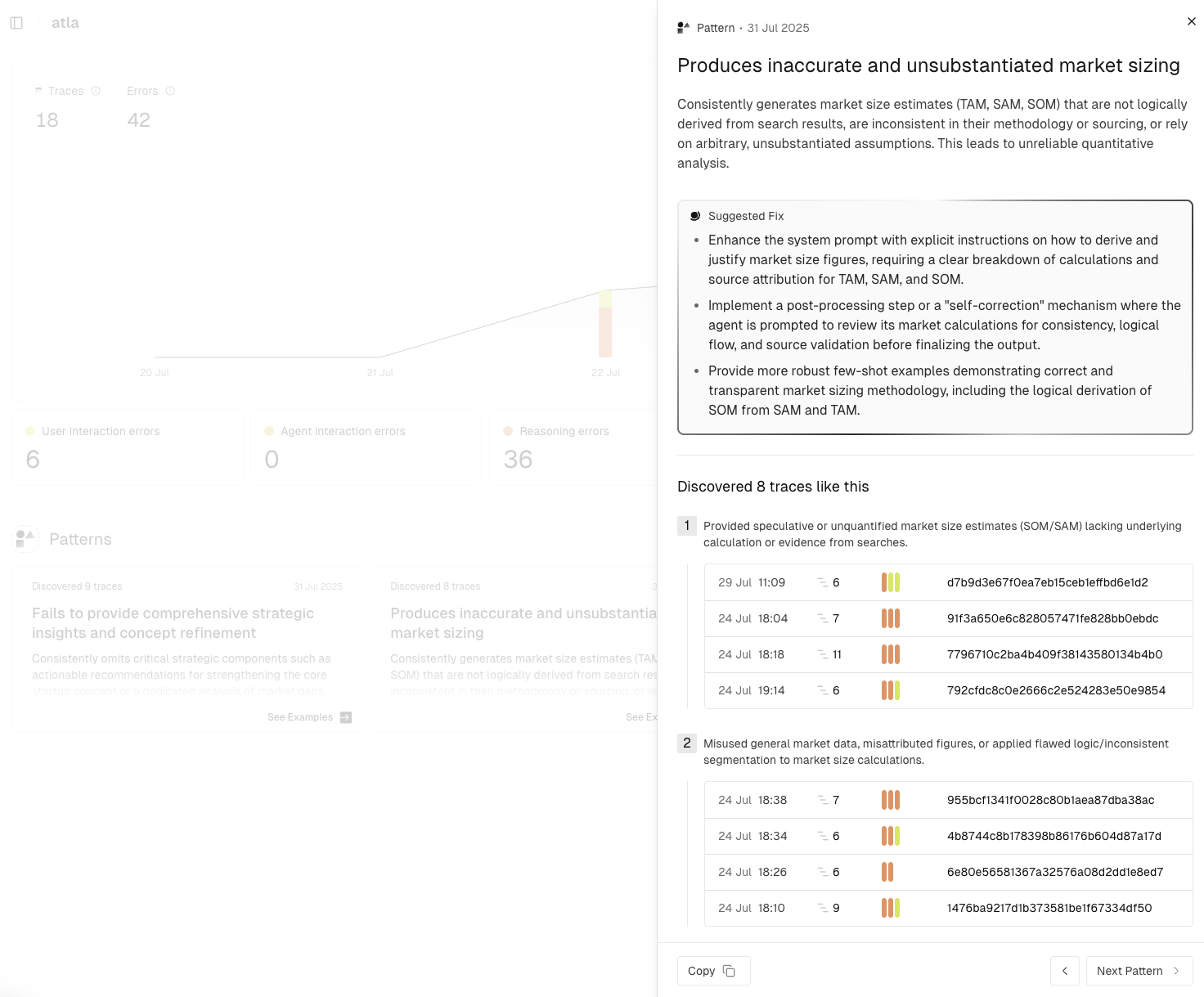

1. Error Pattern Identification

Find error patterns across your agent traces to understand systematically how your agent fails.

2. Span-Level Error Analysis

Rather than just logging failures, Atla analyzes each step of the workflow execution, identifying errors across:

- User interaction errors — where the agent was interacting with a user.

- Agent interaction errors — where the agent was interacting with another agent.

- Reasoning errors — where the agent was thinking internally to itself.

- Tool call errors — where the agent was calling a tool.
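
To make the four categories concrete, here is a small sketch of how span-level errors could be grouped to surface a pattern. The `SpanError` record and the sample data are invented for this example; they do not represent Atla's data model or SDK.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class ErrorCategory(Enum):
    USER_INTERACTION = "user interaction"
    AGENT_INTERACTION = "agent interaction"
    REASONING = "reasoning"
    TOOL_CALL = "tool call"


@dataclass(frozen=True)
class SpanError:
    # Hypothetical record shape: one error attached to one span of one trace.
    trace_id: str
    span_name: str
    category: ErrorCategory


def count_by_category(errors: list[SpanError]) -> Counter:
    """Tally errors per category so recurring failure modes stand out."""
    return Counter(error.category for error in errors)


# Invented sample data for illustration.
errors = [
    SpanError("trace-1", "market_research.web_search", ErrorCategory.TOOL_CALL),
    SpanError("trace-2", "market_research.web_search", ErrorCategory.TOOL_CALL),
    SpanError("trace-2", "report_generator.synthesize", ErrorCategory.REASONING),
]

counts = count_by_category(errors)
top_category, top_count = counts.most_common(1)[0]
print(f"most frequent: {top_category.value} ({top_count} of {len(errors)})")
```

Grouping by category across many traces is what turns individual failures into a pattern, such as repeated tool call errors in the same search step.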

3. Error Remediation

Directly implement Atla’s suggested fixes with Claude Code using our Vibe Kanban integration, or pass our instructions on to your coding agent via “Copy for AI”.

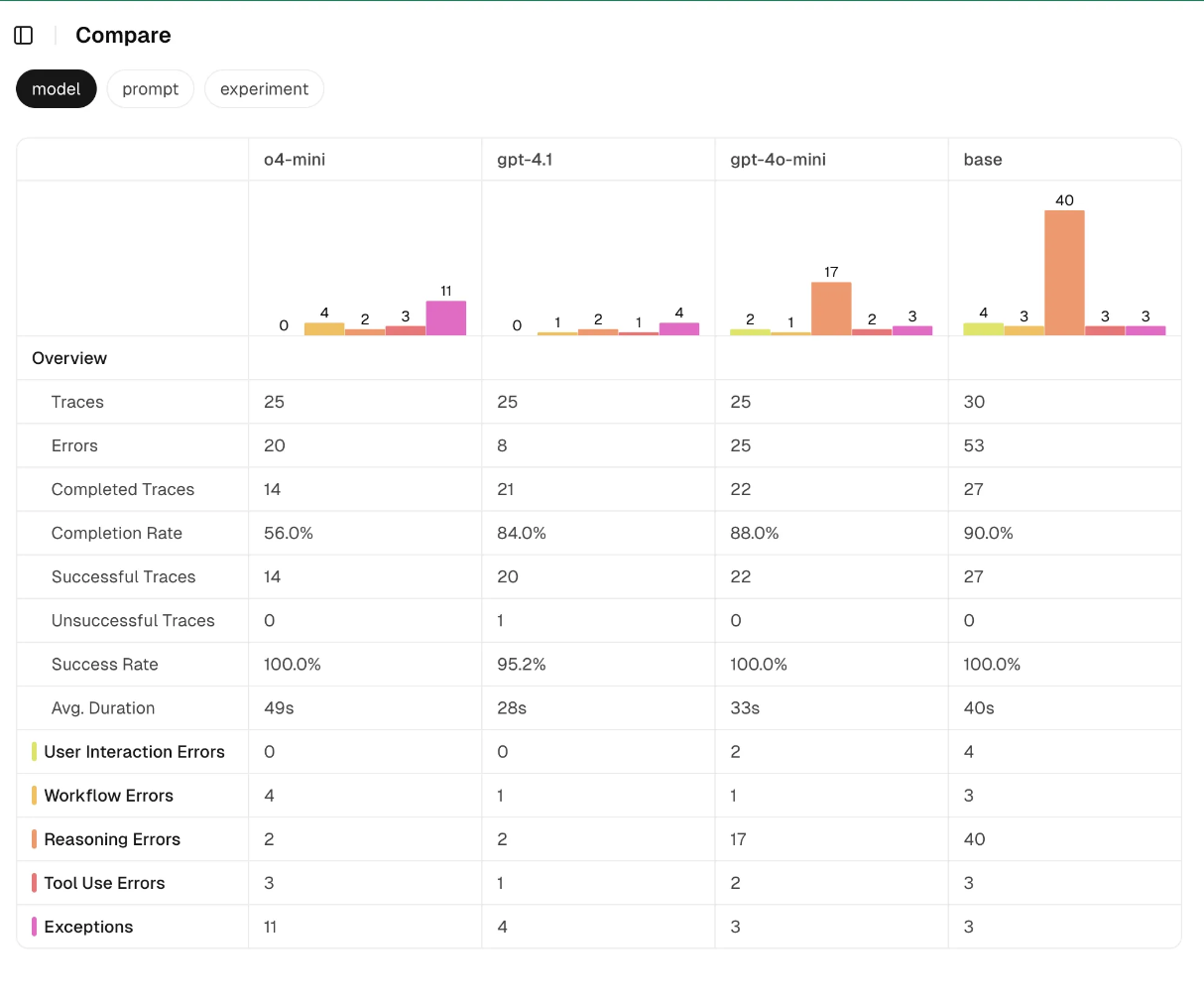

4. Experimental Comparison

Run experiments and compare performance to confidently improve your agents.

Get Started Today

Installation

Environment Setup

Resources
